# Useful Links

# RL Characteristics

- No supervisor, only reward signal

- Feedback is delayed, not instantaneous

- Time matters (sequential, non IID data)

- Agent's actions affect the subsequent data it receives

# History

- The history can be represented by

where A stands for action, O stands for observation and R stands for reward.

The history determines what happens next, whether that is the agent picking an action or the environment selecting observation/rewards

But it is not very useful cause we do not want to go back through the entire history to make a decision.

# State

This is where state comes into play, it has a summary of the history that can be used for making decisions moving forward.

- This state is the agent's internal representation, not the environment's state since that is not accessible to the agent.

- i.e. whatever info the agent uses to pick the next action

- i.e. it is the info used by RL learning algorithms

# Information state

An information state (aka Markov state) contains all useful information from the history.

The future is independent of the past given the present.

You can throw away everything that came before, just keep present state and that characterizes future actions.

The environment's state is Markov, the history is Markov. And now we need to ensure the agent's state is Markov as well.

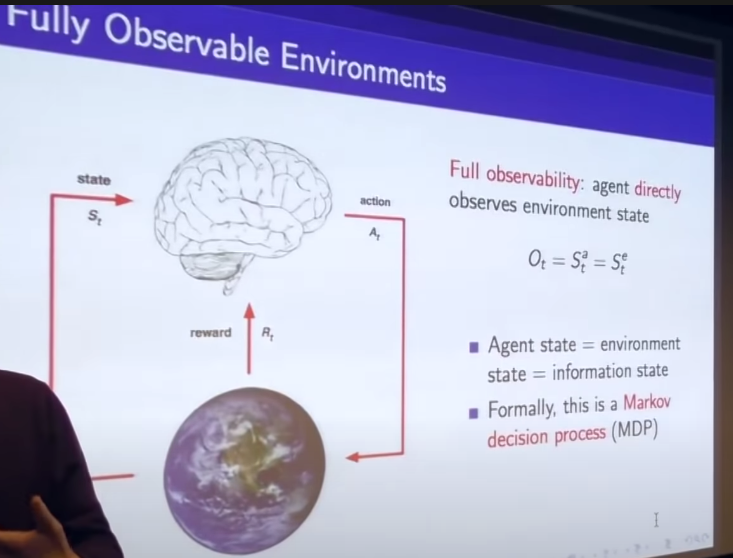

# Fully observable environments

# Partially observable environments

Some examples

- a robot with camera vision isn't told its absolute location

- A trading agent only observes current prices

In this case, agent state != environment state.

Formally, this is Partially Observable Markov Decision Process (POMDP)

In this, agent has to construct its own state representation.

- Could use complete history

- Could use beliefs of environment state using probability

- Recurrent Neural Network

# Major Components of RL Agent

An RL agent may include one or more of these components (not an exclusive list):

# Policy

Agent's behavior function. A map from state to action

- Determinstic Policy:

. a function that maps - Stochastic Policy:

. Helps make random decisions and explore

# Value function

- expected return if you start in a particular state, how much reward would you earn in the future if you were dropped into this state of markov process

- Used to evaluate goodness/badness of states

- to select actions

- to predict future reward

# Model

agent's representation of the environment that predicts what the environment will do next

- Transitions: P predicts the next state (i.e. dynamics)

- Rewards: R predicts the next (immediate) reward

# Learning and Planning

Two fundamental problems in sequential decision making

- Reinforcement Learning

- Environment initially unknown

- Agent interacts with environment

- Agent improves policy

- Planning

- Model of environment is known

- Agent performs computation with model (without external interaction)

- Agent improves its policy aka search, deliberation

# Prediction vs Control

- Prediction: Evaluate the future given a policy

- Control: Optimize the future by finding the best policy

Basics →