# MDP

David Silver's RL Course Lecture 2 shows a really nice intuitive example of Markov Chain

# Discount Factor

The discount factor essentially determines how much the reinforcement learning agents cares about rewards in the distant future relative to those in the immediate future.

- If 𝛾=0, the agent will be completely myopic and only learn about actions that produce an immediate reward.

- If 𝛾=1, the agent will evaluate each of its actions based on the sum total of all of its future rewards.

Most Markov reward and decision processes are discounted. The reasons are:

- Mathematicaly convenient to discount rewards

- Avoid infinite returns in cyclic MDPs

- if the reward is financial, immediate rewards may earn more interest than delayed rewards

- Animal/human behavior shows preference for immediate reward

# Bellman Equation for MRPs

Expected Reward in current step = Immediate reward in next step + Expected reward in the next step

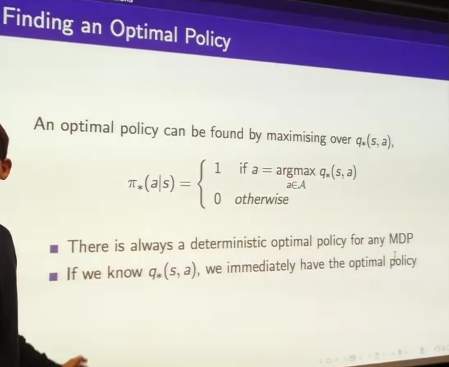

# Optimal Policy

David explains this concept with an example in lecture 2 around 1:19:00

# Bellman Optimality Equation for Q*

This is the bellman equation version that is more commonly referenced, not the one above.